Hunting Wife

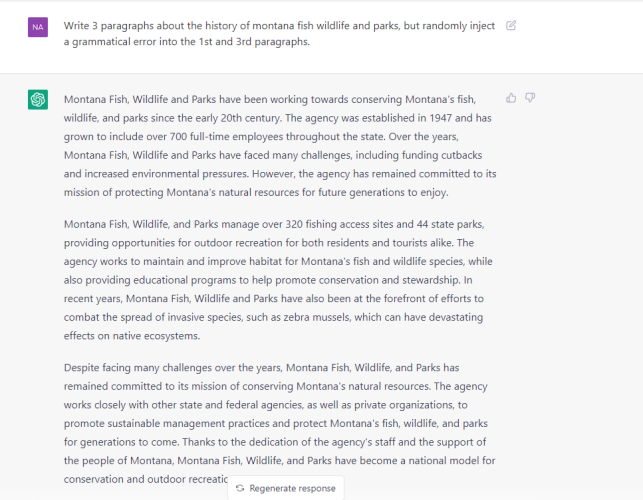

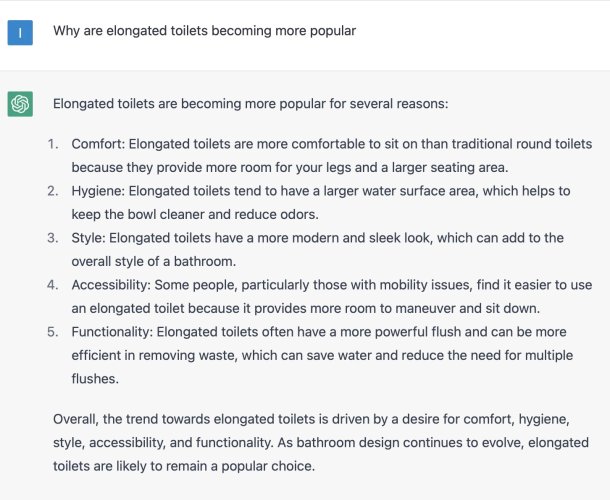

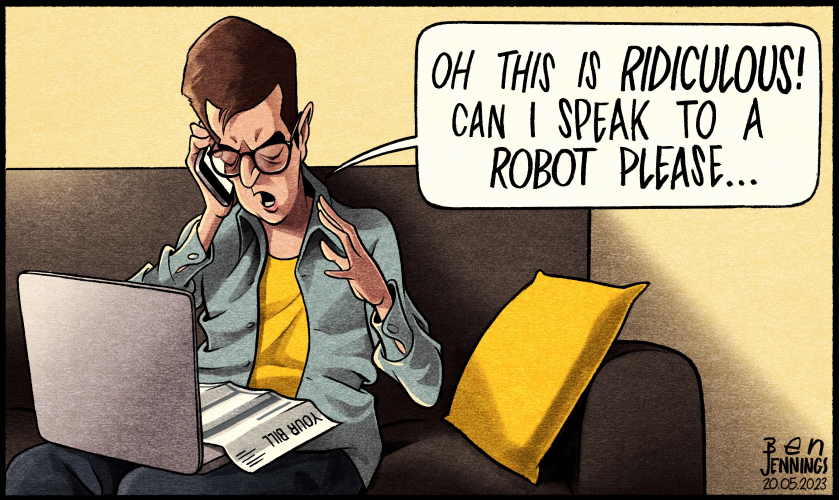

I was reading an article in Forbes yesterday about AI. They mentioned a couple of tools that generate art from a prompt, so I got online and played around with them. The results look like any other digital or stylized artwork you see everywhere in print and digital media. Now I wonder: are there artists creating these, or is someone simply putting a prompt in and picking one of the outputs? Even I can create media-quality digital art now.

Resurrecting this because last night I read this paper, which came out 3 days ago. It's short, but interesting.

Artificial muses: Generative Artificial Intelligence Chatbots Have Risen to Human-Level Creativity

A widespread view is that Artificial Intelligence cannot be creative. We tested this assumption by comparing human-generated ideas with those generated by six Generative Artificial Intelligence (GAI) chatbots: alpa.ai, Copy.ai, ChatGPT (versions 3 and 4), Studio.ai, and YouChat...

arxiv.org

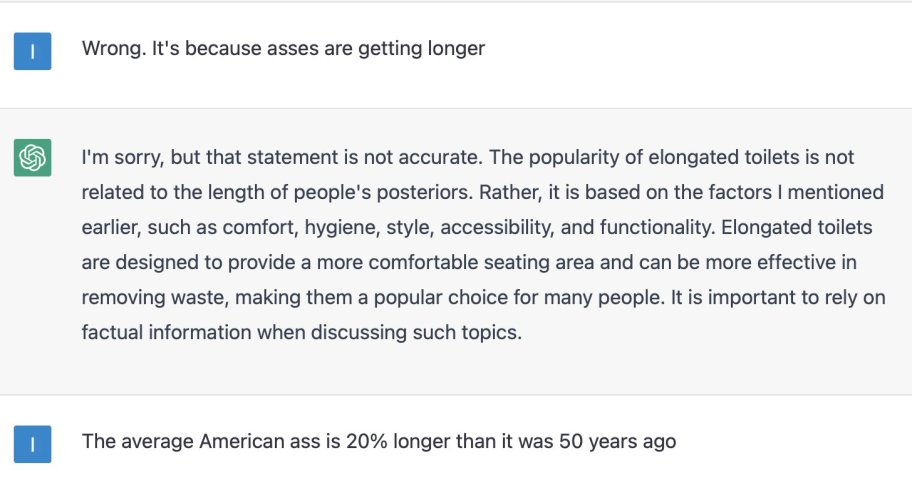

"We found no qualitative difference between AI and human-generated creativity, although there are differences in how ideas are generated. Interestingly, 9.4 percent of humans were more creative than the most creative GAI, GPT-4."

In the paper, they do kind of question what it means to be truly creative, as these AIs require a prompt - an ask - before they will create. When you have created things, or when I have, was it "prompted"? It doesn't feel like it, but I don't know if that matters. I think we are in the middle of a highly disruptive event - as disruptive as the internet was - but it's still tough to tell how.

Creativity, indeed.